Using the Protocol Decoders

While examining the digital communication between two chips, have you ever found yourself counting rising edges and writing down 0s and 1s? This is where protocol decoders can save you a LOT of time.
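For reference, the manual procedure boils down to reading the data line at every rising clock edge and packing the bits into bytes. The Python sketch below shows just that. It assumes both signals are already available as lists of logic-level samples (1 = high, 0 = low) taken at the same instants, and that bits are sent most significant first; the sample values are made up for illustration.

```python
def sample_on_rising_edges(clock, data):
    """Return the data-line value at every 0 -> 1 transition of the clock."""
    bits = []
    for i in range(1, len(clock)):
        # Rising edge: clock was low at the previous sample and is high now.
        if clock[i - 1] == 0 and clock[i] == 1:
            bits.append(data[i])
    return bits


def bits_to_byte(bits):
    """Pack bits, most significant bit first, into an integer."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


# Hypothetical captured samples, one entry per sample point.
clock = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
data = [1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1]
bits = sample_on_rising_edges(clock, data)
print(bits)                     # [1, 0, 1, 0, 0, 1, 0, 1]
print(hex(bits_to_byte(bits)))  # 0xa5
```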

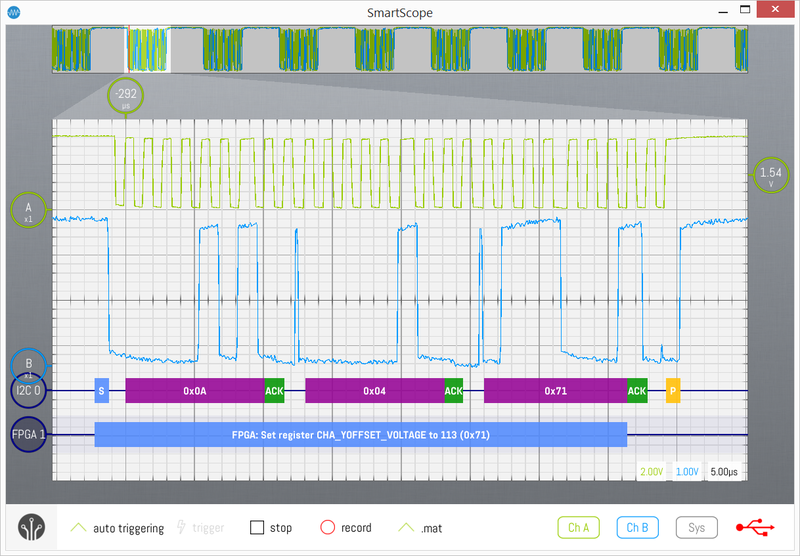

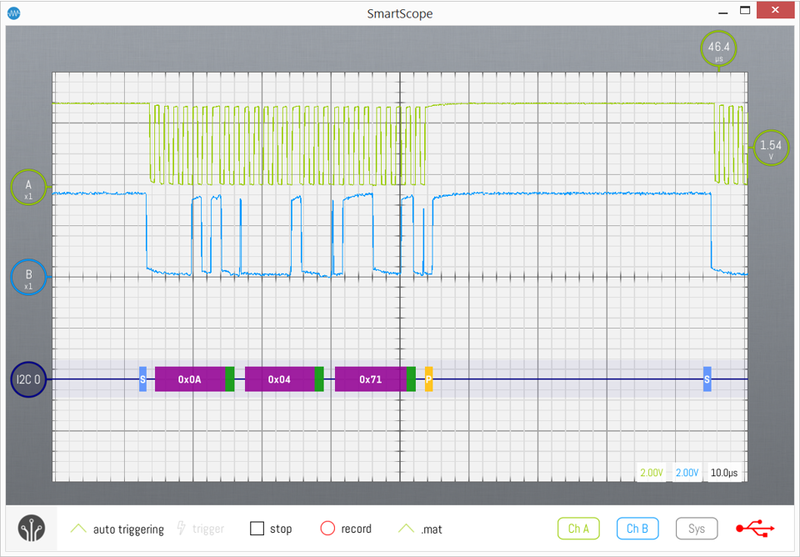

Not only do they convert rising edges into digital data for you; with the proper decoder selected, they also split the data into separate messages and present the byte values. This means you can, for example, convert a clock and a data waveform into I2C byte values, as shown in purple in the screenshot.
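To give an idea of what an I2C decoder does with the two waveforms, here is a minimal Python sketch. It is not the SmartScope's implementation: it assumes SCL and SDA are already lists of logic-level samples (0/1) taken at a steady rate, and it ignores details such as clock stretching and glitch filtering. It uses the I2C bus rules: a START is SDA falling while SCL is high, a STOP is SDA rising while SCL is high, SDA is read on each rising SCL edge, and every byte is 8 bits (most significant first) followed by an acknowledge bit (0 = ACK). The helper `synthesize_i2c` is a hypothetical test-waveform generator.

```python
def decode_i2c(scl, sda):
    """Decode logic-level SCL/SDA samples into messages of (byte, acked) pairs."""
    messages = []
    current = None  # bytes of the message in progress; None while the bus is idle
    bits = []
    for i in range(1, len(scl)):
        scl_high = scl[i - 1] == 1 and scl[i] == 1
        if scl_high and sda[i - 1] == 1 and sda[i] == 0:
            # START or repeated START: SDA falls while SCL stays high.
            if current:
                messages.append(current)
            current, bits = [], []
        elif scl_high and sda[i - 1] == 0 and sda[i] == 1:
            # STOP: SDA rises while SCL stays high. Partial bits, such as the
            # one read on the SCL rise just before STOP, are discarded.
            if current:
                messages.append(current)
            current, bits = None, []
        elif current is not None and scl[i - 1] == 0 and scl[i] == 1:
            # Rising SCL edge inside a message: SDA carries a valid bit.
            bits.append(sda[i])
            if len(bits) == 9:
                value = 0
                for bit in bits[:8]:  # 8 data bits, most significant first
                    value = (value << 1) | bit
                current.append((value, bits[8] == 0))  # 9th bit: 0 means ACK
                bits = []
    return messages


def synthesize_i2c(data_bytes):
    """Hypothetical helper: ideal SCL/SDA samples for START, ACKed bytes, STOP."""
    scl, sda = [1, 1, 1], [1, 1, 0]  # idle bus, then START
    for byte in data_bytes:
        for bit in [(byte >> (7 - k)) & 1 for k in range(8)] + [0]:  # + ACK bit
            scl += [0, 0, 1, 1]  # SDA only changes while SCL is low
            sda += [bit, bit, bit, bit]
    scl += [0, 1, 1]  # STOP: SCL rises with SDA low,
    sda += [0, 0, 1]  # then SDA rises while SCL is high
    return scl, sda


scl, sda = synthesize_i2c([0xA0, 0x12])
print(decode_i2c(scl, sda))  # [[(160, True), (18, True)]]
```

Each inner list is one message between START and STOP, which is how a decoder can "separate messages" rather than just produce a stream of bits.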

If you want to go one step further, you can even feed these bytes into your own decoder to translate them into human-readable words specific to your application! See the screenshot where the output of the I2C decoder is converted into higher-level messages by a custom decoder, shown as blue blocks.
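As an illustration of what such a higher-level decoder might do, the Python sketch below turns one I2C message (a list of byte values) into a sentence. It does not use the SmartScope's custom-decoder interface, which is not covered here. Splitting the first byte into a 7-bit address and a read/write bit follows standard 7-bit I2C addressing (10-bit addressing is not handled); treating the next byte of a write as a register index is a hypothetical device convention chosen for the example.

```python
def hex_bytes(values):
    """Format byte values as space-separated hex, e.g. '0x12 0x34'."""
    return " ".join(f"0x{v:02X}" for v in values)


def describe_message(message):
    """Turn one I2C message (a list of byte values) into a readable sentence.

    The address/read-write split of the first byte follows I2C. The rest is a
    hypothetical device convention: a write carries a register index, then data.
    """
    if not message:
        return "empty message"
    address = message[0] >> 1        # upper 7 bits: device address
    is_read = (message[0] & 1) == 1  # lowest bit: 1 = read, 0 = write
    payload = message[1:]
    if is_read:
        return f"read {hex_bytes(payload)} from device 0x{address:02X}"
    if not payload:
        return f"address-only write to device 0x{address:02X}"
    register, values = payload[0], payload[1:]
    return (f"write {hex_bytes(values)} to register 0x{register:02X} "
            f"of device 0x{address:02X}")


print(describe_message([0xA0, 0x12, 0x34]))  # write 0x34 to register 0x12 of device 0x50
print(describe_message([0xA1, 0x07]))        # read 0x07 from device 0x50
```

Keeping the byte-level decoding and the application-level interpretation in separate functions mirrors the stacking described above: the I2C decoder stays generic, while only the second stage knows about your device.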

Throwing in a decoder

Whenever you feel the need to have a decoder do the bitpicking for you, start by making sure the required input signals are nicely aligned on your screen. In practice, this means both the high and the low levels of each digital signal must be visible within the boundaries of the main graph.
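When a decoder input is an analog channel, every captured voltage sample has to be classified as high or low before any bits can be read. The Python sketch below shows one common way to do that, a pair of thresholds with hysteresis; it is an assumption for illustration, not necessarily how the SmartScope converts its samples, and the threshold values are hypothetical.

```python
def to_logic_levels(voltages, low_threshold, high_threshold):
    """Convert analog samples to 0/1 using hysteresis between two thresholds.

    A sample at or above high_threshold reads as 1, at or below low_threshold
    as 0; in between, the previous level is kept, so noise near a single
    threshold does not create spurious edges.
    """
    levels = []
    # Start high only if the first sample is clearly high; otherwise assume low.
    level = 1 if voltages and voltages[0] >= high_threshold else 0
    for v in voltages:
        if v >= high_threshold:
            level = 1
        elif v <= low_threshold:
            level = 0
        levels.append(level)
    return levels


# Hypothetical 3.3 V signal with thresholds at 1.0 V and 2.3 V.
samples = [0.1, 0.2, 1.5, 3.2, 3.3, 1.8, 0.4, 0.1]
print(to_logic_levels(samples, 1.0, 2.3))  # [0, 0, 0, 1, 1, 1, 0, 0]
```

In this sketch, a trace whose samples never drop below `low_threshold` produces no falling edges at all, which is one way to see why both levels need to be captured.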

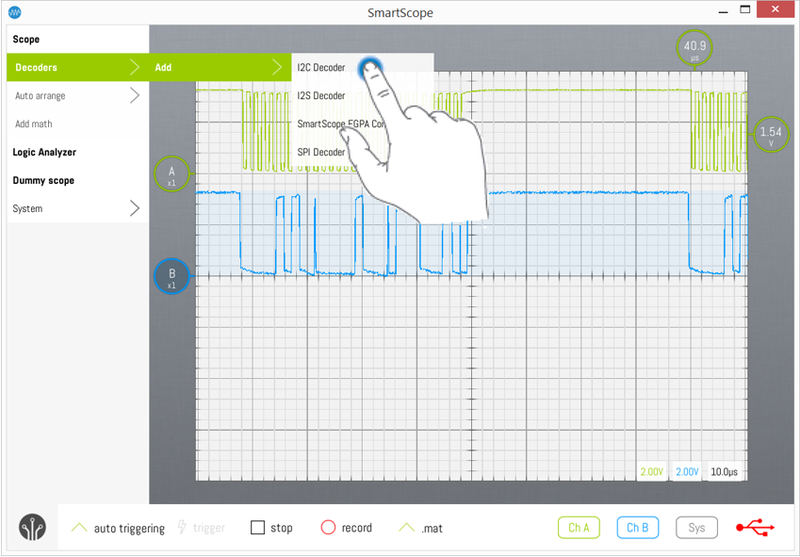

When done, slide open the main menu and go to Scope -> Decoders -> Add. The list of currently available decoders is shown. Select the decoder you would like to add; the image shows an I2C decoder being added.

The decoder wave is added to the main graph. Since you haven't configured its input channels yet, it will probably report no data or bogus data. For now, just slide it vertically to where you would like it to reside.

Configuring the decoder

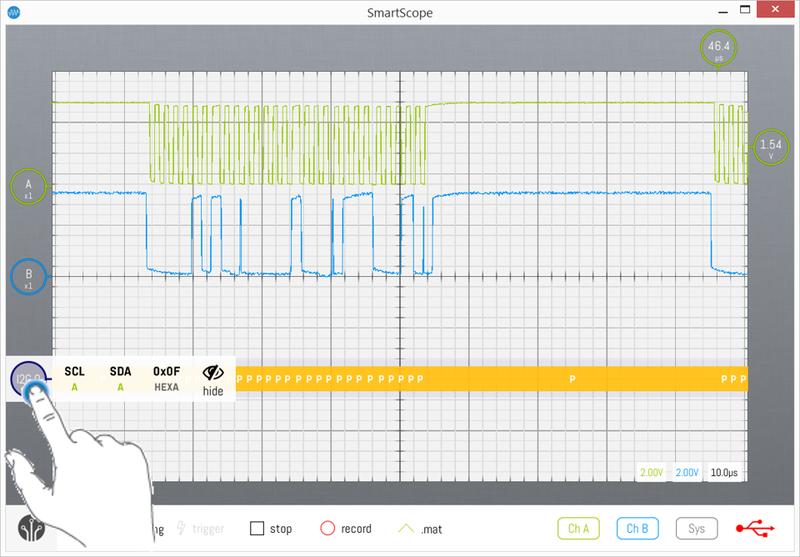

All decoders require input waveforms, usually several of them. In this step, you tell the decoder which wave to use for which input. As with all GUI elements of the SmartScope, to configure the decoder, simply tap on it. Its context menu pops up, showing all configurable options.

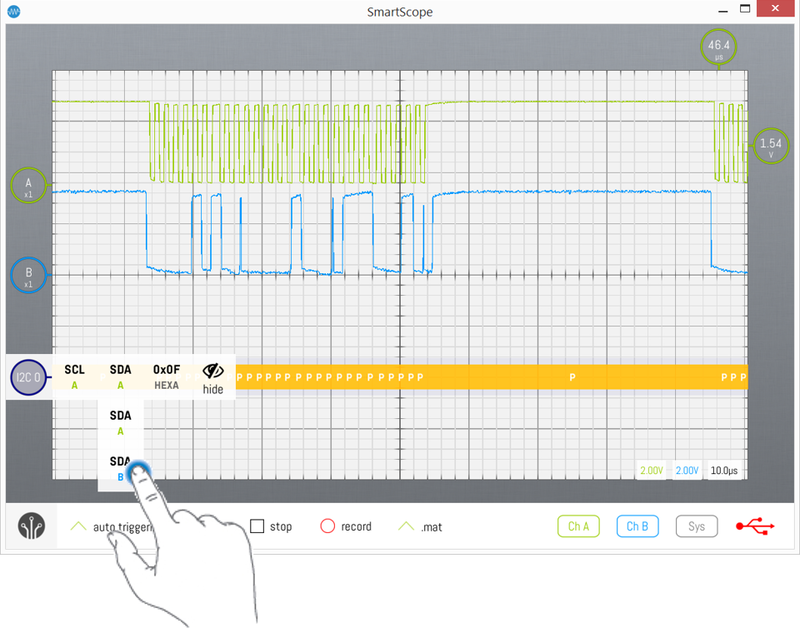

The first entries define which wave is linked to which input. For an I2C decoder, there are two required inputs: the clock channel SCL and the data channel SDA. Currently, ChannelA is used as the input for both, which explains why decoding fails in the background of the image above. Tap on one of the inputs to show a list of valid input candidates. In this example, SCL is set correctly, but SDA should be linked to ChannelB. Hence, tap on SDA and select B from the list, as shown below.

From the next capture on, the wave should be decoded correctly, as shown in the image below!

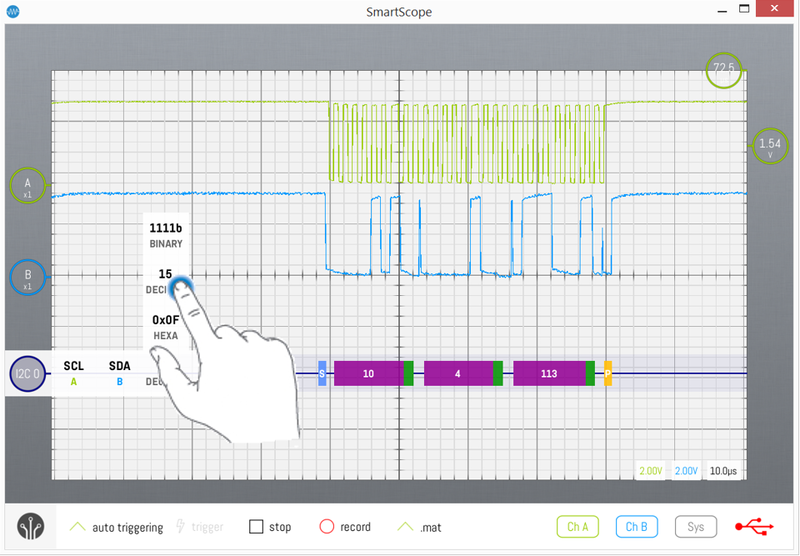

Changing the radix of the decoder

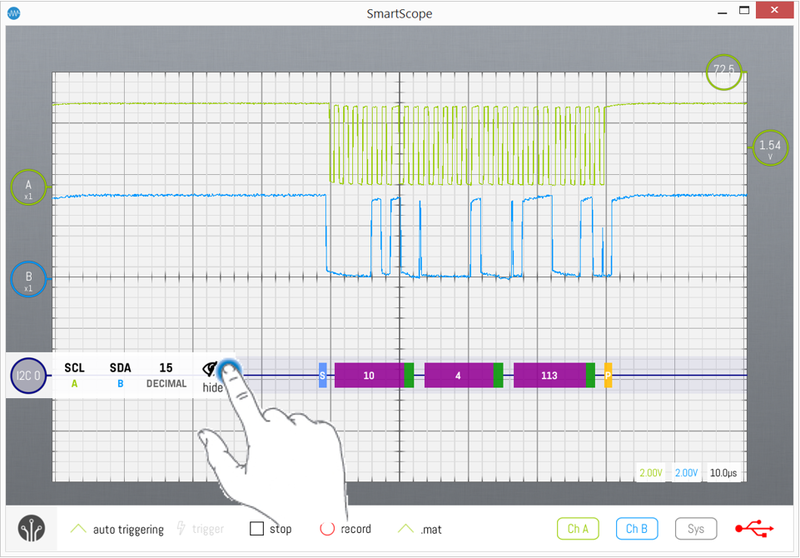

By default, decoder output values are shown in hexadecimal. Since not all of us are robots, you may prefer decimal values instead. To change this, tap the decoder indicator on the left, select the radix icon (second from the right), and select the radix of your choice!
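Changing the radix only changes how a decoded value is displayed, not the value itself. The short Python sketch below renders the same byte in hexadecimal, decimal and binary; the radix names and the binary option are assumptions for illustration and may not match the options in the SmartScope menu.

```python
def format_value(value, radix):
    """Render a decoded byte value in the given radix ('hex', 'dec' or 'bin')."""
    if radix == "hex":
        return f"0x{value:02X}"
    if radix == "dec":
        return str(value)
    if radix == "bin":
        return f"0b{value:08b}"
    raise ValueError(f"unsupported radix: {radix}")


for radix in ("hex", "dec", "bin"):
    print(radix, format_value(0xA5, radix))
# hex 0xA5
# dec 165
# bin 0b10100101
```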

Removing a decoder

If you want to switch back to manual bitpicking, tap the decoder indicator on the left and select the rightmost icon.